MAESTRO: Task-Relevant Optimization via Adaptive Feature Enhancement and Suppression for Multi-task 3D Perception

Abstract

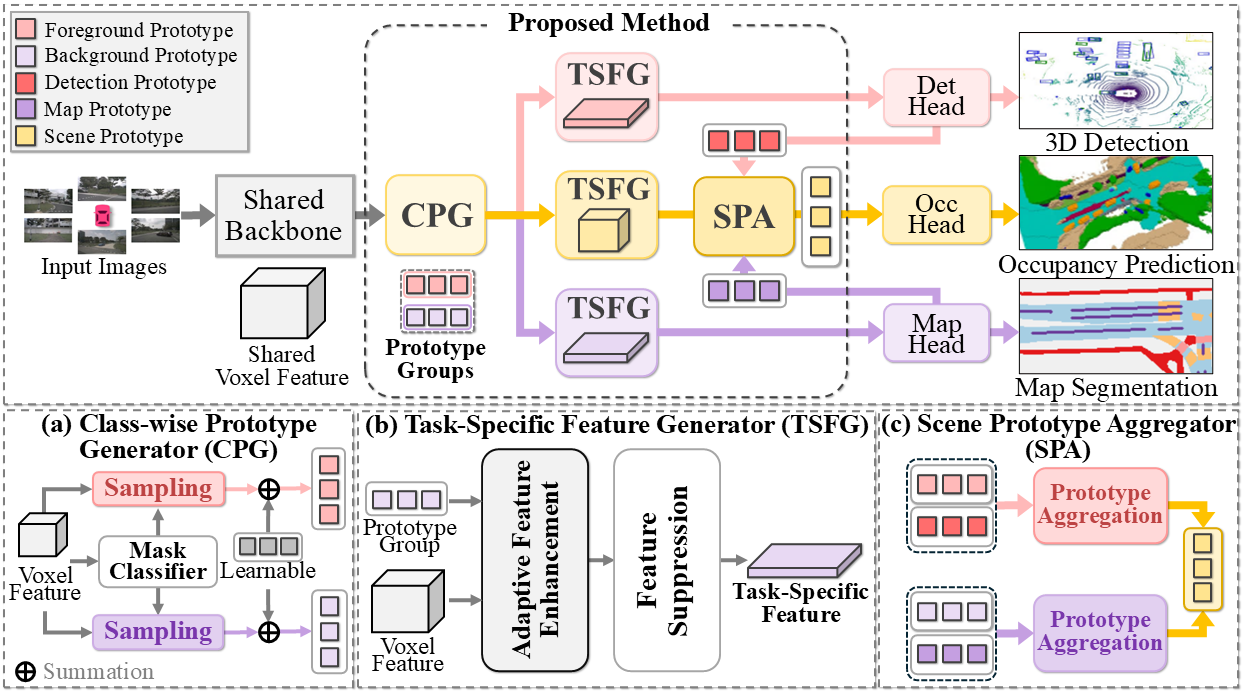

The goal of multi-task learning is to perform multiple tasks simultaneously using a shared data representation. While this approach can improve learning efficiency, it may also degrade performance because of task conflicts that arise when the model is optimized for different objectives. To address this challenge, we introduce MAESTRO, a structured framework designed to generate task-specific features and mitigate feature interference in multi-task 3D perception, covering 3D object detection, bird's-eye view (BEV) map segmentation, and 3D occupancy prediction. MAESTRO comprises three components: the Class-wise Prototype Generator (CPG), the Task-Specific Feature Generator (TSFG), and the Scene Prototype Aggregator (SPA). CPG groups class categories into foreground and background groups and generates group-wise prototypes. The foreground prototypes are assigned to the 3D object detection task and the background prototypes to the map segmentation task, while both groups are assigned to the 3D occupancy prediction task. TSFG uses these prototype groups to retain task-relevant features while suppressing irrelevant ones, thereby improving performance on each task. SPA enhances the prototype groups assigned to 3D occupancy prediction using information produced by the 3D object detection head and the map segmentation head. Extensive experiments on the nuScenes and Occ3D benchmarks demonstrate that MAESTRO consistently outperforms existing methods on 3D object detection, BEV map segmentation, and 3D occupancy prediction.
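The prototype grouping and task assignment described in the abstract can be sketched in a few lines. This is a minimal illustration under stated assumptions, not MAESTRO's implementation: the class names, the toy two-dimensional features, and the use of masked average pooling (the mean feature of all voxels carrying a class label) to form each class-wise prototype are hypothetical choices made for readability. Only the task-to-group mapping follows the text directly.

```python
from statistics import fmean

# Hypothetical class split: which semantic classes count as foreground vs background.
FOREGROUND = {"car", "pedestrian"}
BACKGROUND = {"drivable_surface", "sidewalk"}

# Task-to-group assignment as described in the abstract:
# detection -> foreground, map segmentation -> background, occupancy -> both.
TASK_GROUPS = {
    "detection": ["foreground"],
    "map_segmentation": ["background"],
    "occupancy": ["foreground", "background"],
}


def class_prototypes(features, labels):
    """Masked average pooling (assumed): mean feature of all voxels with a given label."""
    protos = {}
    for cls in sorted(set(labels)):
        members = [f for f, lab in zip(features, labels) if lab == cls]
        # zip(*members) walks the feature dimensions; average each one.
        protos[cls] = [fmean(dim) for dim in zip(*members)]
    return protos


def group_prototypes(protos):
    """Split class-wise prototypes into foreground and background groups."""
    groups = {"foreground": {}, "background": {}}
    for cls, proto in protos.items():
        if cls in FOREGROUND:
            groups["foreground"][cls] = proto
        elif cls in BACKGROUND:
            groups["background"][cls] = proto
    return groups


def prototypes_for_task(task, groups):
    """Collect the prototype group(s) assigned to a task."""
    assigned = {}
    for group in TASK_GROUPS[task]:
        assigned.update(groups[group])
    return assigned


# Toy voxel features (2-D) and their class labels.
features = [[1.0, 0.0], [3.0, 0.0], [0.0, 2.0], [0.0, 4.0]]
labels = ["car", "car", "sidewalk", "sidewalk"]

groups = group_prototypes(class_prototypes(features, labels))
print(prototypes_for_task("detection", groups))         # {'car': [2.0, 0.0]}
print(prototypes_for_task("map_segmentation", groups))  # {'sidewalk': [0.0, 3.0]}
print(prototypes_for_task("occupancy", groups))         # {'car': [2.0, 0.0], 'sidewalk': [0.0, 3.0]}
```

Keeping the assignment in a single table makes the design choice explicit: the detection and map branches see disjoint prototype sets, while the occupancy branch sees their union.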

Overview

Multi-view images are processed by a shared backbone to produce a structured 3D voxel representation. The CPG generates foreground and background prototype groups, which guide the TSFG in refining task-relevant features for 3D object detection, BEV map segmentation, and 3D occupancy prediction. Task-oriented prototypes derived from the 3D object detection and BEV map segmentation heads are then integrated with the prototype groups via the SPA to form Scene Prototypes. These Scene Prototypes are subsequently processed by the occupancy decoder to produce the final 3D occupancy predictions.
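To make "refining task-relevant features" concrete, the sketch below gates each voxel feature by its best cosine similarity to the prototypes assigned to a task, so features matching the task's prototypes are retained and the rest are suppressed. This similarity gate is a hypothetical stand-in chosen for illustration, not the TSFG design: the function names and the `temperature` and `bias` parameters are assumptions, and the actual module may use a different mechanism entirely.

```python
import math


def cosine(a, b):
    """Cosine similarity between two vectors; 0.0 if either has zero norm."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    if na == 0.0 or nb == 0.0:
        return 0.0
    return dot / (na * nb)


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def gate_features(features, task_prototypes, temperature=10.0, bias=0.5):
    """Hypothetical enhancement/suppression gate.

    Each feature is scaled by sigmoid(temperature * (s - bias)), where s is its
    highest cosine similarity to any prototype assigned to the task. Features
    close to a task prototype keep most of their magnitude; others shrink toward 0.
    """
    if not task_prototypes:
        raise ValueError("each task needs at least one assigned prototype")
    refined, gates = [], []
    for f in features:
        best = max(cosine(f, p) for p in task_prototypes)
        g = sigmoid(temperature * (best - bias))
        gates.append(g)
        refined.append([g * x for x in f])
    return refined, gates


features = [[2.0, 0.0], [0.0, 3.0]]  # one "car-like" voxel, one "sidewalk-like" voxel
det_refined, det_gates = gate_features(features, [[1.0, 0.0]])  # foreground prototype
map_refined, map_gates = gate_features(features, [[0.0, 1.0]])  # background prototype
print([round(g, 3) for g in det_gates])  # [0.993, 0.007]
print([round(g, 3) for g in map_gates])  # [0.007, 0.993]
```

With a foreground prototype, the voxel aligned with it keeps nearly all of its magnitude while the unrelated voxel is scaled toward zero; swapping in a background prototype reverses the effect. The same shared features thus yield different task-specific views, which is the per-task behavior the overview describes.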

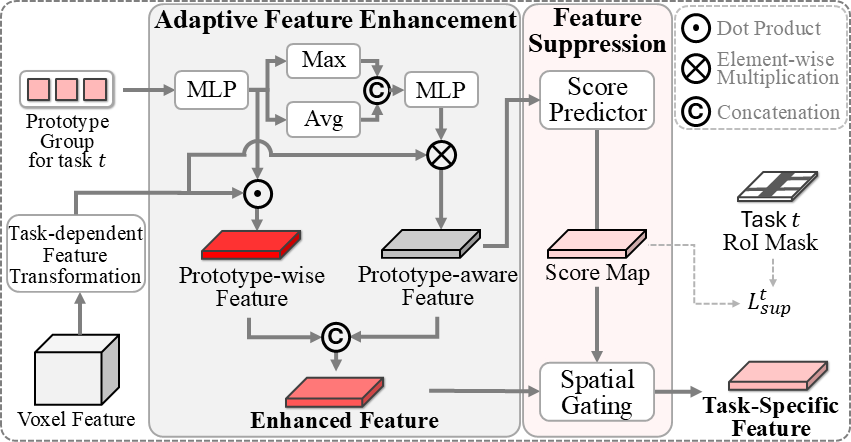

Detailed Architecture of TSFG

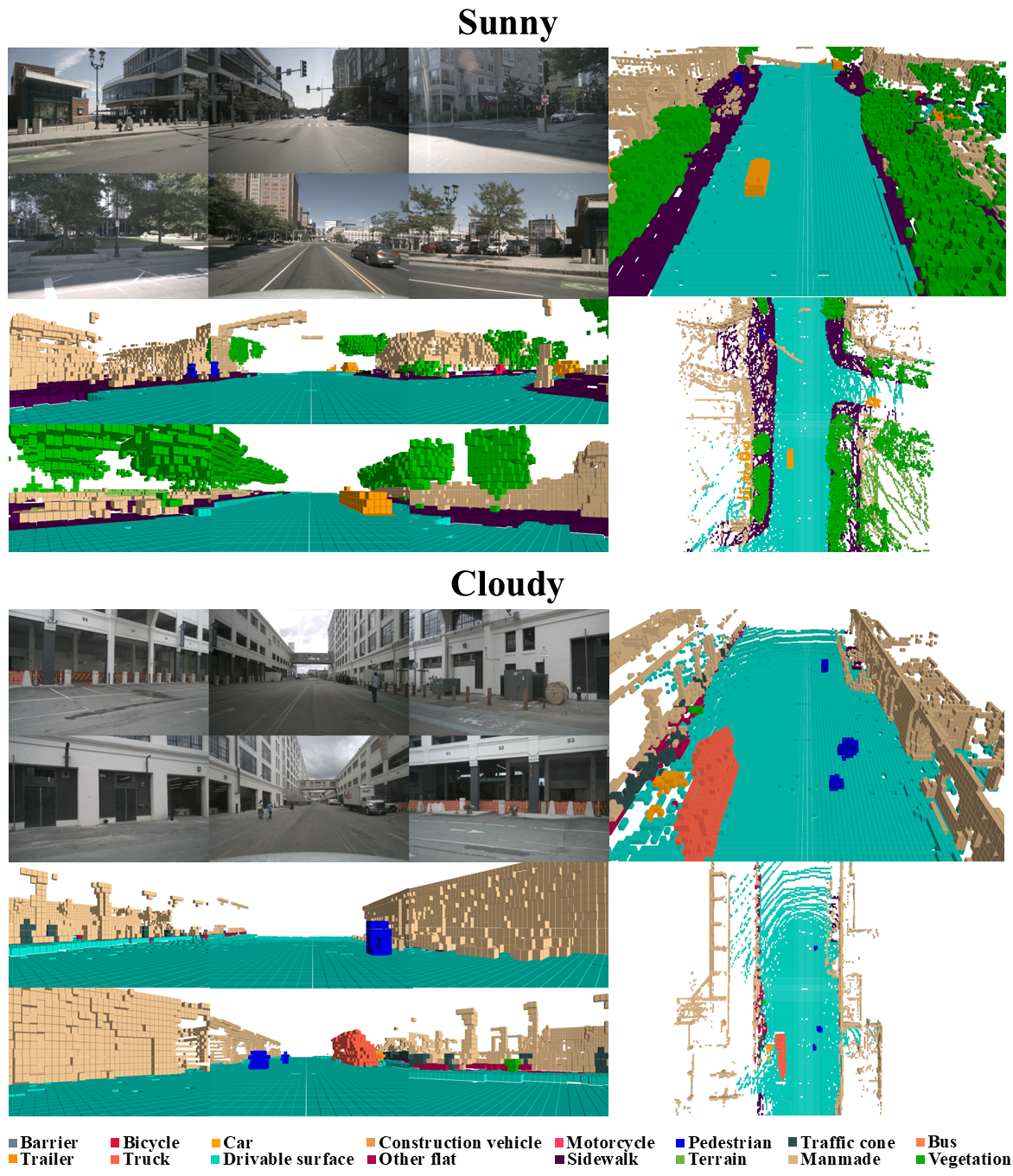

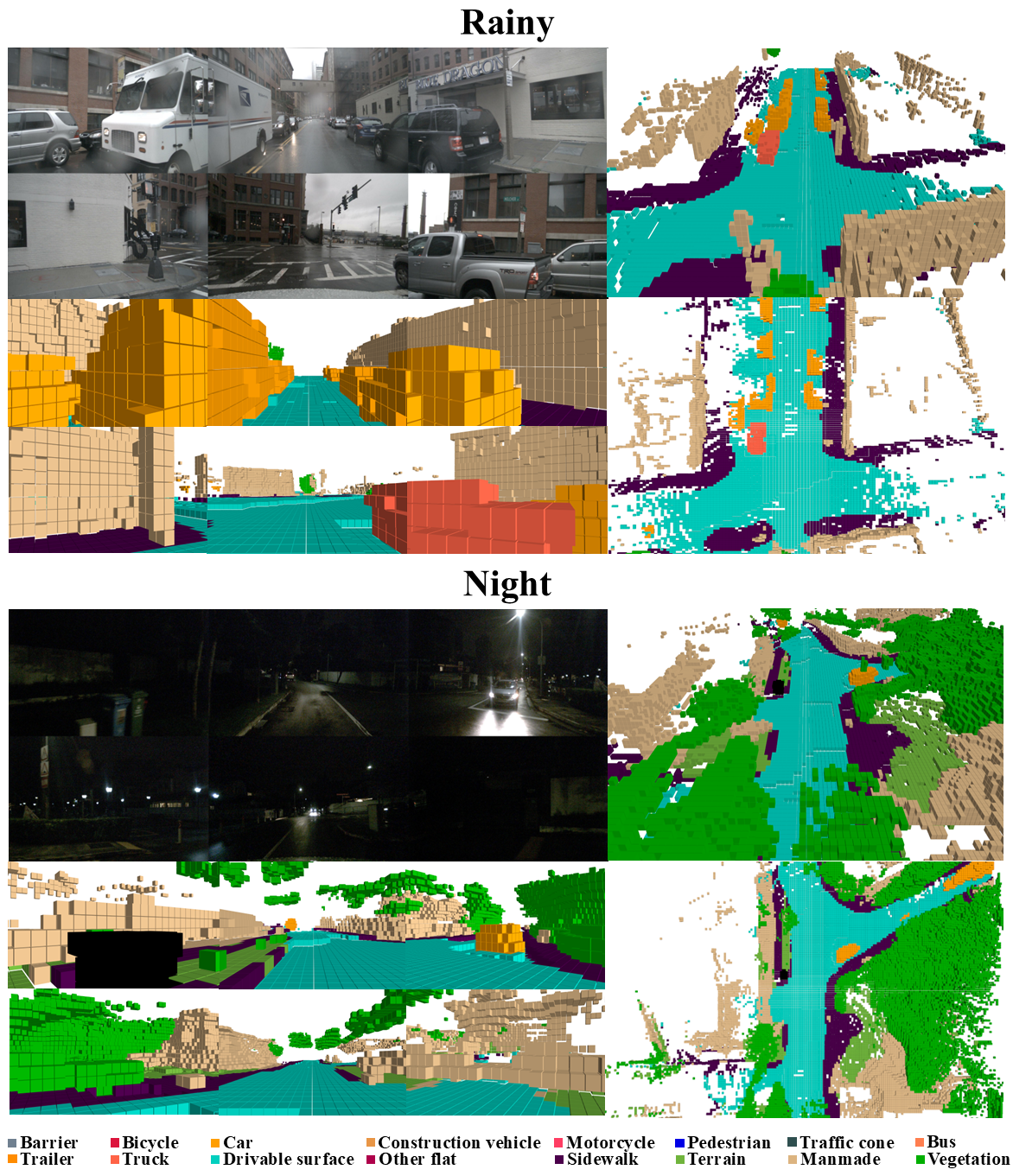

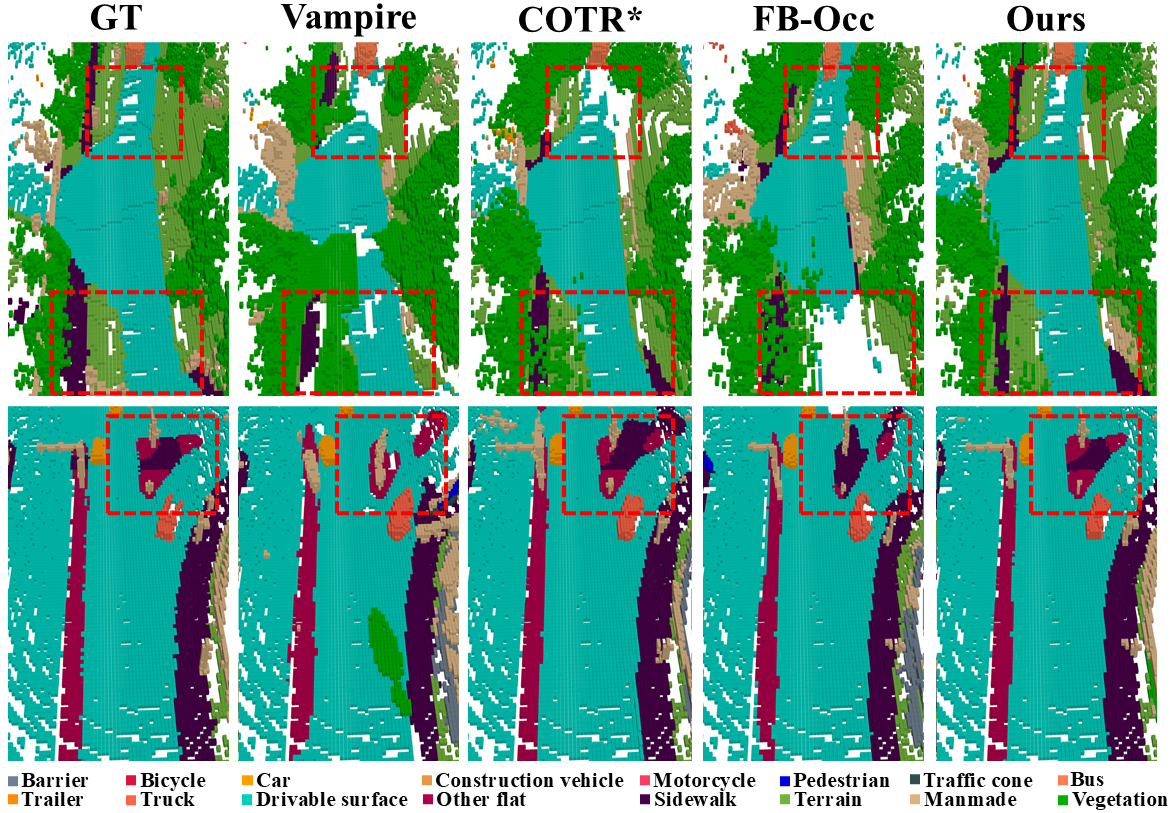

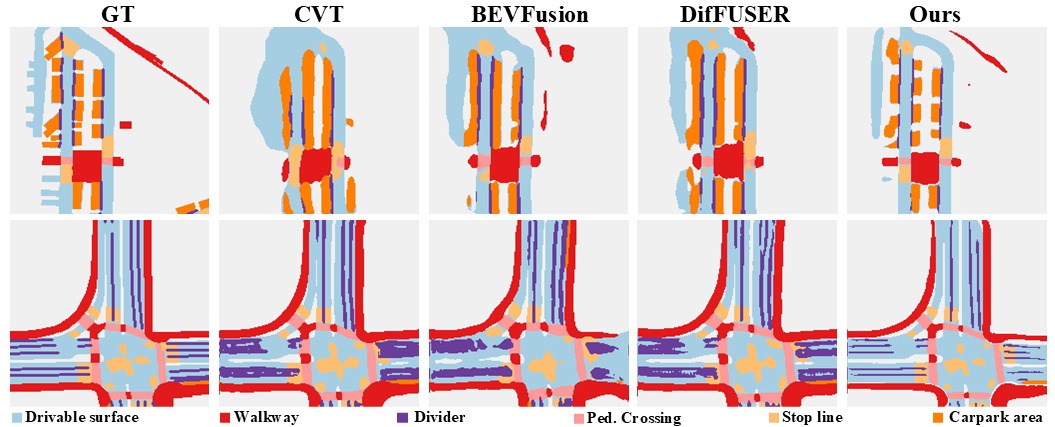

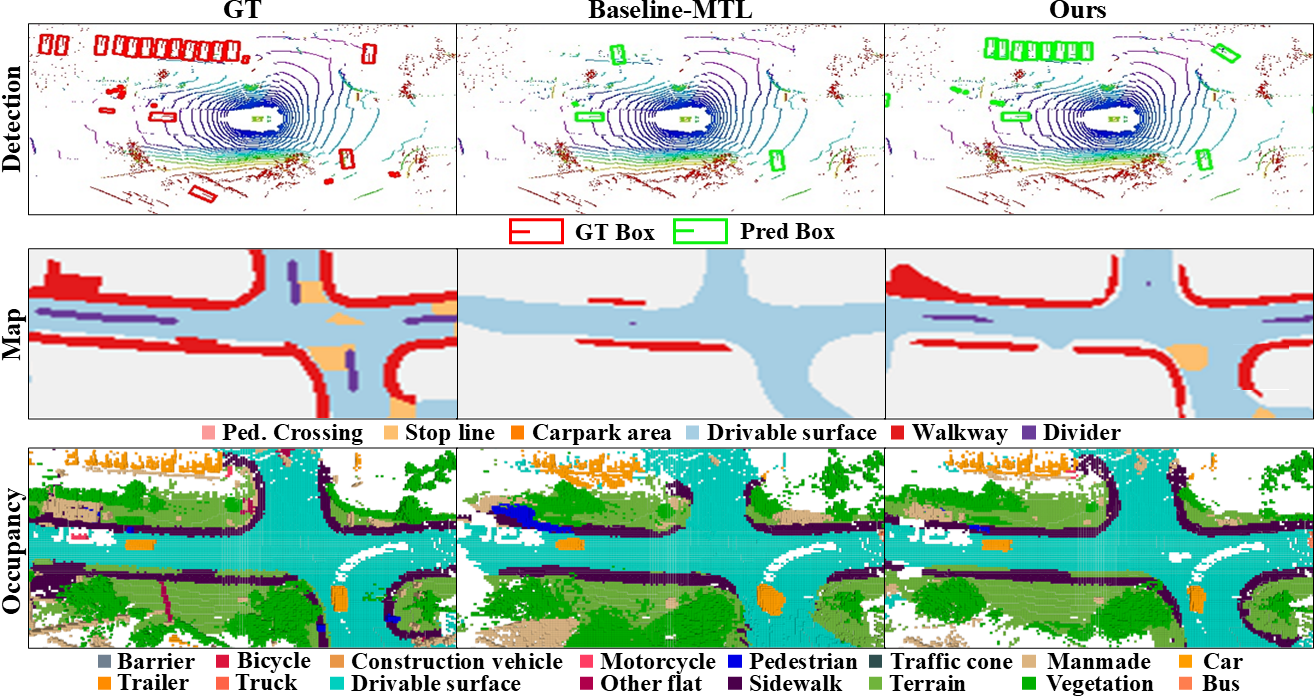

Qualitative Results

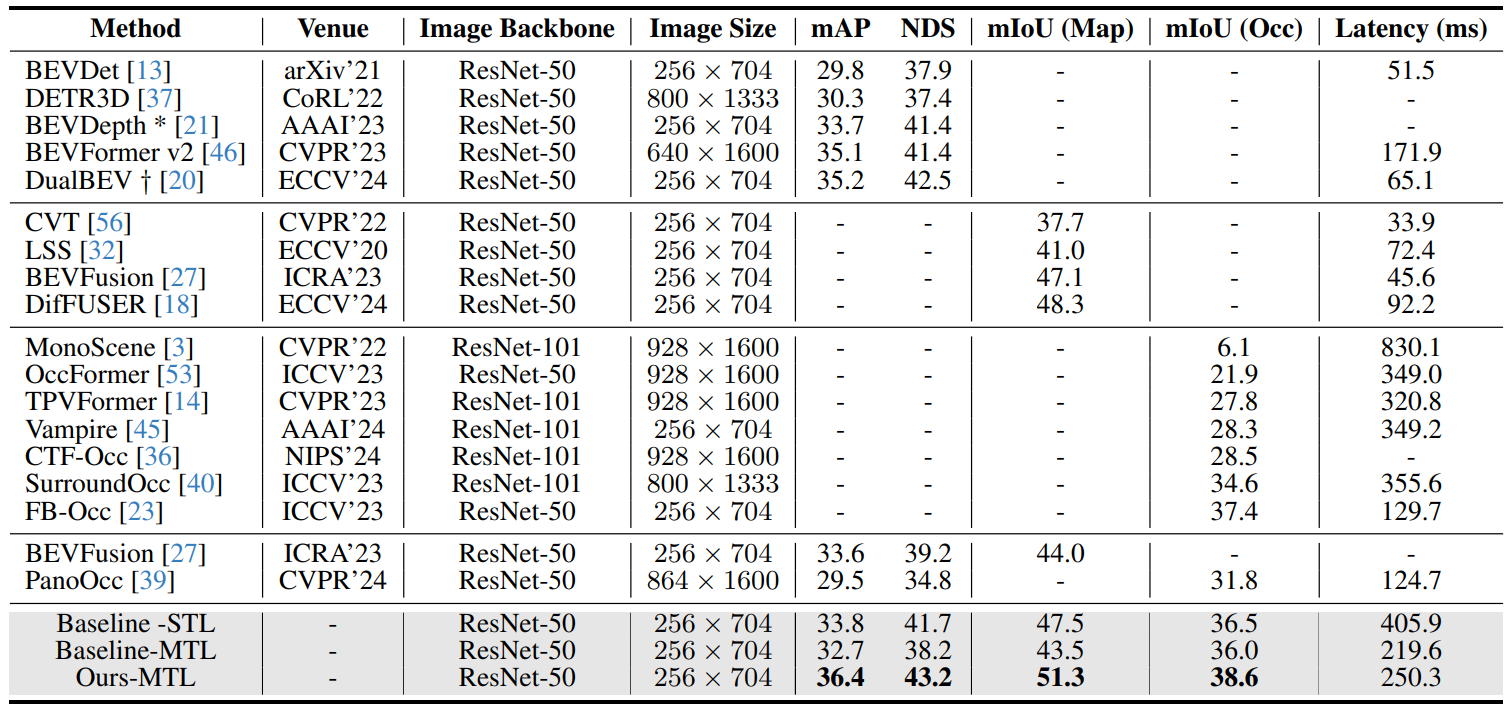

Quantitative Results

Additional Qualitative Results

Citation

@article{kang2025maestro,
  title={MAESTRO: Task-Relevant Optimization via Adaptive Feature Enhancement and Suppression for Multi-task 3D Perception},
  author={Kang, Changwon and Kim, Jisong and Shin, Hongjae and Park, Junseo and Choi, Jun Won},
  journal={arXiv preprint arXiv:2509.17462},
  year={2025}
}

License & Credits

This page’s HTML/CSS scaffold is adapted from an open academic project template (Nerfies-style).